Dynamic mapping of an Information System

- Map an Information System

- At a time of accelerating digital transformation, massive moves to the Cloud, and the surge of "as a Service" offerings, it seems useful to be able to understand, in general or in detail, how Information Systems work.

- IT architectures are often complex, and often still partially or largely "on premise". Even when they are essentially in the Cloud, they tend to become steadily more complex (and more and more expensive ☹).

- We propose to dynamically deliver the cartography of an Information System, from a "high level" view to a granular view, or vice versa, so that everyone can find what they need.

- Our analyses also make it possible to simplify Information Systems and migrate them to the Cloud in a largely automated way.

- A technical bias: automated and exhaustive reverse engineering of the Information System

- {openAudit} is the combination of technical data lineage and log analysis:

- Technical data lineage, to know the origin and destination of each piece of information, from its sources to its uses. This data lineage is based on the continuous analysis of flows (procedural code, ETL/ELT, ESB, transformations in the data visualization layer, etc.),

- Log analysis, to know the real uses of each piece of data: who accesses it, when, and how.
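As a rough illustration of how these two ingredients fit together, the sketch below models lineage as a directed graph and tallies accesses from a log. The object names, the edge list, and the log record format are all hypothetical; this is not a description of how {openAudit} is implemented.

```python
from collections import Counter, defaultdict

# Hypothetical lineage edges: (source, target) means "target is fed by source".
EDGES = [
    ("crm.customers", "staging.customers"),
    ("erp.orders", "staging.orders"),
    ("staging.customers", "dwh.sales"),
    ("staging.orders", "dwh.sales"),
    ("dwh.sales", "dashboard.revenue"),
]

# Hypothetical access log entries: (user, object, ISO date).
ACCESS_LOG = [
    ("alice", "dashboard.revenue", "2024-01-10"),
    ("bob", "dashboard.revenue", "2024-01-11"),
    ("alice", "dwh.sales", "2024-01-12"),
]


def upstream_sources(target, edges):
    """Return every object that directly or indirectly feeds `target`."""
    parents = defaultdict(set)
    for source, dest in edges:
        parents[dest].add(source)

    seen = set()
    stack = [target]
    while stack:
        node = stack.pop()
        for parent in parents[node]:
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def access_counts(log):
    """Count how many times each object appears in the access log."""
    return Counter(obj for _user, obj, _date in log)


origins = upstream_sources("dashboard.revenue", EDGES)
usage = access_counts(ACCESS_LOG)
print(sorted(origins))
print(usage["dashboard.revenue"], usage["staging.orders"])
```

Lineage answers "where does this come from?", while the log tally answers "is anyone actually using it?"; keeping them as two separate functions mirrors that split.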

- This mapping reflects the exact reality of the System at time “t”: the analyses are carried out continuously, in delta mode, in the Cloud, and without mobilizing teams, even when the systems are heterogeneous or hybrid.

- Unique know-how to achieve overall coherence:

- {openAudit} analyzes views, views of views, etc.,

- {openAudit} resolves other causes of breaks in the lineage: transfers by FTP, cursors, subqueries… which are handled by structural recognition,

- {openAudit} processes SQL (or its derivatives) encapsulated in ELT/ETL jobs,

- {openAudit} processes the dataviz layer to provide a true end-to-end view, and to highlight all the management rules often contained in the dataviz layer,

- {openAudit} manages dynamic procedures by processing the run in parallel.

- 3 main use cases

- Use case #1: share with everyone the understanding of the Information System

- The data lineage makes it possible to follow the path of each piece of information through the System.

- We offer several views that present the results in different ways, including 2 main ones:

- A granular data lineage, to act on the feed chains when a problem occurs,

- An end-to-end impact analysis, to control regressions, or to identify the dissemination of information under the GDPR, etc.
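To make the idea of impact analysis concrete, here is a minimal sketch that walks a lineage graph downstream from one field and reports everything it reaches, with the number of hops. The field names and edges are invented for the example, and the breadth-first traversal is just one plausible way to do this, not necessarily the product's approach.

```python
from collections import defaultdict, deque

# Hypothetical lineage edges: (source, target) means "source feeds target".
EDGES = [
    ("crm.customers.email", "staging.customers.email"),
    ("staging.customers.email", "dwh.contacts.email"),
    ("staging.customers.email", "export.partner_feed"),
    ("dwh.contacts.email", "dashboard.mailing"),
]


def impacted_objects(start, edges):
    """Map every object downstream of `start` to its distance in hops."""
    children = defaultdict(list)
    for source, target in edges:
        children[source].append(target)

    distance = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in children[node]:
            if child not in distance:
                distance[child] = distance[node] + 1
                queue.append(child)

    del distance[start]  # the starting point is not "impacted" by itself
    return distance


for obj, hops in sorted(impacted_objects("crm.customers.email", EDGES).items()):
    print(f"{obj}: {hops} hop(s) downstream")
```

The same traversal serves both purposes mentioned above: before a change, it lists what might regress; for a personal-data field, it lists where that data ends up.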

- Use case #2: identify dead material to reduce IT debt

- Crossing data lineage with the analysis of how information is used makes it possible to detect the "living branches" of the Information System and, conversely, the "dead branches".

- On average, 50% of what is stored in an Information System can be discarded via mass decommissioning: tables, views, procedural code, ELT/ETL jobs, dashboards.

- Use case #3: automatically migrate a System to the Cloud

- Cloud migrations are never easy. {openAudit} deconstructs the processing of information in the feeds, but also in the dataviz layer, on legacy technologies (example: Cobol, PL/SQL coupled with SAP BO, etc.), then reconstructs the flows on the fly, as well as the dashboards, in target technologies.

- 90% of a migration can be done automatically.
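Returning to use case #2, the detection of "living" and "dead" branches can be sketched as follows, under a simplifying assumption: an object is considered living if it appears in the access logs or feeds something that does, and dead otherwise. The object names, the edges, and the set of accessed objects are hypothetical.

```python
from collections import defaultdict

# Hypothetical lineage edges: (source, target) means "source feeds target".
EDGES = [
    ("erp.orders", "staging.orders"),
    ("staging.orders", "dwh.sales"),
    ("dwh.sales", "dashboard.revenue"),
    ("erp.orders", "staging.orders_legacy"),
    ("staging.orders_legacy", "dwh.sales_v1"),
    ("dwh.sales_v1", "dashboard.old_report"),
]

# Hypothetical result of log analysis: objects actually consulted by users.
ACCESSED = {"dashboard.revenue"}


def split_living_and_dead(edges, accessed):
    """Return (living, dead) sets of objects.

    Living: accessed objects plus everything upstream of them.
    Dead: every other object present in the lineage graph.
    """
    parents = defaultdict(set)
    nodes = set()
    for source, target in edges:
        parents[target].add(source)
        nodes.update((source, target))

    living = set()
    stack = [obj for obj in accessed if obj in nodes]
    while stack:
        node = stack.pop()
        if node in living:
            continue
        living.add(node)
        stack.extend(parents[node])

    return living, nodes - living


living, dead = split_living_and_dead(EDGES, ACCESSED)
print("living:", sorted(living))
print("dead:", sorted(dead))
```

In this toy graph the legacy chain ends in a dashboard nobody opens, so the whole chain is flagged as dead, while `erp.orders` stays living because it still feeds the dashboard that is used.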

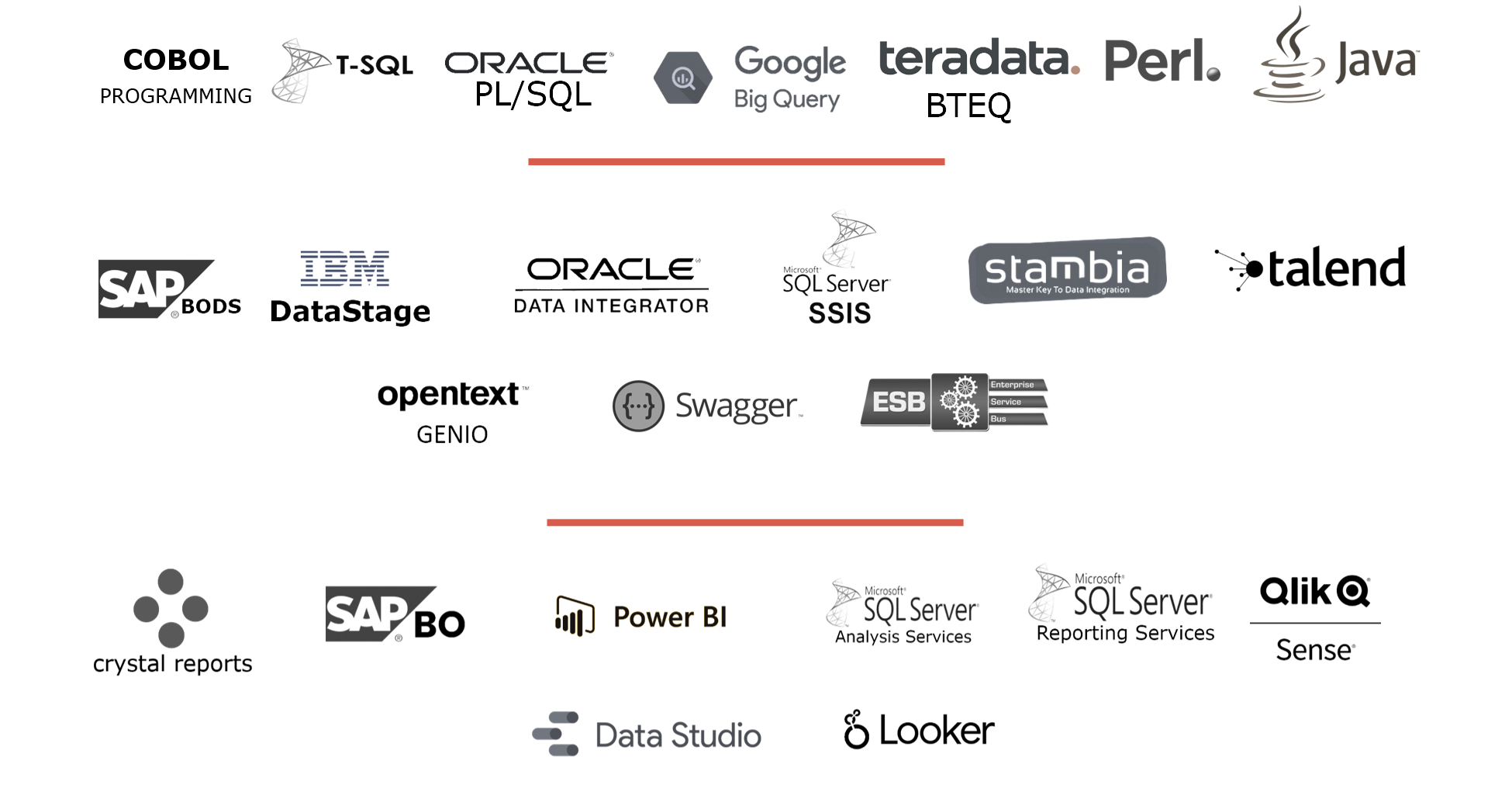

- Technologies analyzed:

- Conclusion: the complexity of Information Systems is not inevitable, and should no longer be an obstacle. We are convinced that by bringing out all the underlying techniques in an intelligible way, and by sharing them with everyone, systems can harmonize naturally over time.

- And to transform them quickly, reducing IT debt and automating migrations will be valuable allies.
