Why Reduce IT Debt?

- Why reduce IT debt?

- What is IT debt?

- Debt in legacy systems: the biggest blocker!

Companies that have made heavy investments in digital transformation programs often find that things do not always progress according to the initial roadmap.

One of the major reasons is a strong dependence on technologies that have been in place almost forever: legacy systems, i.e. IT debt! Yet these systems continue to work. Companies make do, and long-awaited major digital transformation projects are sometimes postponed again and again.

- “Debt under construction” in the Cloud: the most worrying...

Cloud migration is the cornerstone of most major digital transformation projects, and a large number of companies have now carried out such migrations.

One of the many positive impacts is that business teams now have easier access to data. Data management is shifting towards them through a domain-oriented, self-service design of IT. For some large companies, this marks the advent of so-called “data mesh” architectures: each domain manages its own data pipelines, and the “fabric” that connects these domains (and their associated data resources) consists of a universal interoperability layer.

This is often positive for the business, but data pipelines tend to proliferate! We are heading straight towards a new “mega IT debt” made up of replicated or unused flows and reinvented calculation rules.

Overall, many companies are moving towards hyper-complexity in their Cloud systems, often with unsustainable associated costs!

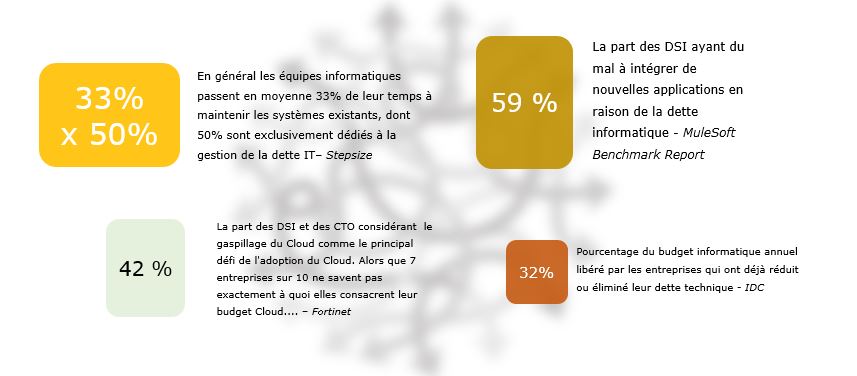

{openAudit} makes it possible to simplify legacy systems and migrate them, but also to contain Cloud systems. In short, a real, “sustainable” IT debt reduction program for “sustainable IT”!

Some statistics:

- Reduce IT debt at high speed: methodology

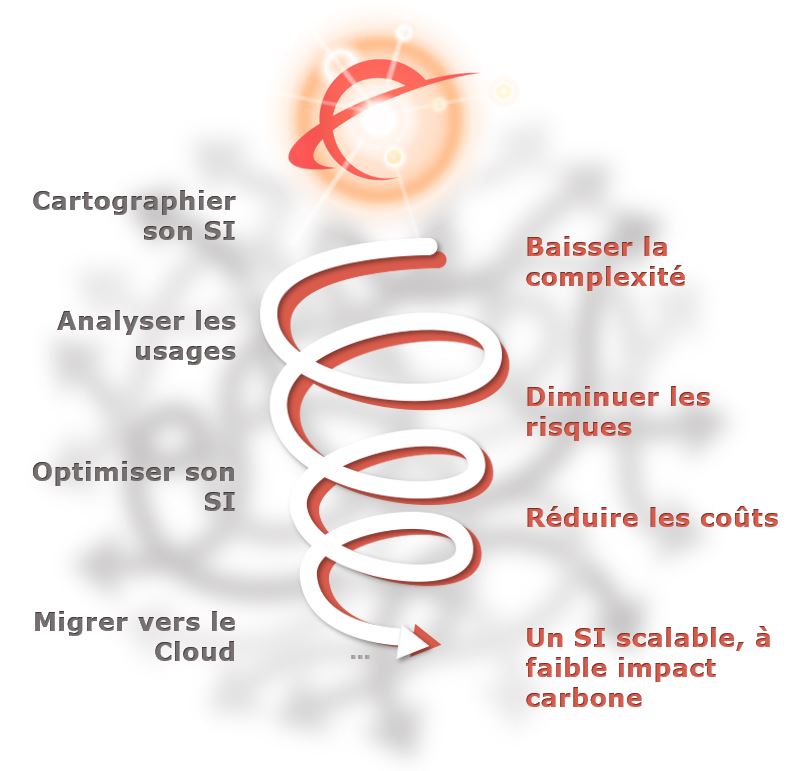

- 1- Break down complexity by sharing a dynamic map of the Information System with everyone

The mechanics are always simple in theory: data enters the Information System, is processed, and ends up being consumed. The reality is obviously a little more complex.

Our approach is to perform dynamic reverse engineering across all data transformation processes, from the feed layers to the dashboard cell, handling everything that would otherwise break the chain. This is called “data lineage”.
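To make the idea of data lineage concrete, here is a minimal sketch in Python. It is not how {openAudit} works internally; it only assumes that lineage can be stored as a mapping from each object to the sources it is built from. All table, feed, and dashboard names are hypothetical.

```python
from collections import deque

# Hypothetical lineage edges: each target maps to the sources it is built from.
LINEAGE = {
    "dashboard.sales_kpi": ["mart.sales_by_region"],
    "mart.sales_by_region": ["staging.orders", "staging.regions"],
    "staging.orders": ["feed.orders_csv"],
    "staging.regions": ["feed.regions_csv"],
}


def upstream(node, lineage):
    """Return every object that feeds `node`, directly or indirectly."""
    seen = set()
    queue = deque([node])
    while queue:
        current = queue.popleft()
        for source in lineage.get(current, []):
            if source not in seen:
                seen.add(source)
                queue.append(source)
    return seen


# Trace a dashboard cell back to the feed layer.
origins = upstream("dashboard.sales_kpi", LINEAGE)
print(sorted(origins))
```

A breadth-first walk is used here simply because it is easy to read; any graph traversal that visits each source once would do.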

Enhanced with a search engine, our software {openAudit} determines, for each piece of data, its origin, its destination, its uses, and all the management rules that carried it from one end of the Information System to the other. The views can be oriented towards business or IT users, as desired.

- 2- Identify the data actually used by the businesses

Databases expose audit logs, which {openAudit} scans continuously to identify what the dataviz solutions are requesting and to highlight the data that is the subject of ad hoc queries.

This approach makes it possible to single out the “data points” that are actually used within the processing chains. It quickly becomes clear that countless flows have been added to the plan unnecessarily.
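As an illustration of usage analysis, the sketch below counts recent reads per table from a list of audit records and lists the tables nobody has queried. The record layout, the table names, and the cut-off date are all assumptions made for the example; real audit logs differ from one database to another.

```python
from collections import Counter
from datetime import date

# Hypothetical audit records: (query date, table read).
AUDIT_LOG = [
    (date(2024, 1, 10), "mart.sales_by_region"),
    (date(2024, 3, 2), "mart.sales_by_region"),
    (date(2022, 6, 1), "mart.legacy_margin"),
]

# Hypothetical inventory of every table in the system.
ALL_TABLES = {"mart.sales_by_region", "mart.legacy_margin", "mart.unused_copy"}


def used_tables(audit_log, since):
    """Count reads per table on or after the `since` date."""
    return Counter(table for day, table in audit_log if day >= since)


usage = used_tables(AUDIT_LOG, since=date(2024, 1, 1))
unused = ALL_TABLES - set(usage)

print(dict(usage))
print(sorted(unused))
```

The cut-off date matters: a table read once two years ago is treated here as unused, which is a policy choice rather than a technical one.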

- 3- Identify the sources of data used vs. “dead branches” to reduce IT debt

Starting from the data that is actually used and identifying all of its sources, {openAudit} identifies all the code, tables, and files, i.e. the complete "branches" of the system, that have a real use.

Conversely, {openAudit} identifies the "dead branches" of the system, enabling extensive decommissioning.

These decommissionings can be carried out in on-premises systems, but also in the Cloud, by attaching financial indicators drawn from the information available in Stackdriver (GCP), CloudWatch (AWS), or Azure Monitor (MS).

The data visualization layer is also analyzed by {openAudit}, which makes it possible to detect replication and obsolescence in dataviz tools and to make massive simplifications.
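The dead-branch idea described above can be sketched as a reachability check: start from the objects known to be used, walk the lineage back to their sources, and treat everything never reached as a decommissioning candidate. This is a simplified illustration, not {openAudit}'s actual algorithm. The object names and monthly cost figures are hypothetical; in practice the costs would come from the Cloud provider's monitoring and billing data.

```python
# Hypothetical lineage: each object maps to the sources it is built from.
LINEAGE = {
    "mart.sales_by_region": ["staging.orders"],
    "mart.unused_copy": ["staging.orders_copy"],
    "staging.orders": ["feed.orders_csv"],
    "staging.orders_copy": ["feed.orders_csv"],
}

# Objects known to be queried (for example, from audit logs).
USED = {"mart.sales_by_region"}

# Hypothetical monthly cost per object, in an arbitrary currency.
MONTHLY_COST = {
    "mart.sales_by_region": 300.0,
    "mart.unused_copy": 120.0,
    "staging.orders_copy": 80.0,
}


def live_objects(used, lineage):
    """Return the used objects plus everything upstream of them."""
    live = set()
    stack = list(used)
    while stack:
        node = stack.pop()
        if node in live:
            continue
        live.add(node)
        stack.extend(lineage.get(node, []))
    return live


# Every object mentioned anywhere in the lineage, as target or as source.
all_objects = set(LINEAGE) | {s for sources in LINEAGE.values() for s in sources}

live = live_objects(USED, LINEAGE)
dead = all_objects - live
savings = sum(MONTHLY_COST.get(obj, 0.0) for obj in dead)

print(sorted(dead))
print(savings)
```

Note that `feed.orders_csv` stays live even though one of its consumers is dead: a source is only a dead branch when nothing in use depends on it.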

- Catalyze a company's digital transformation by automating Cloud migrations

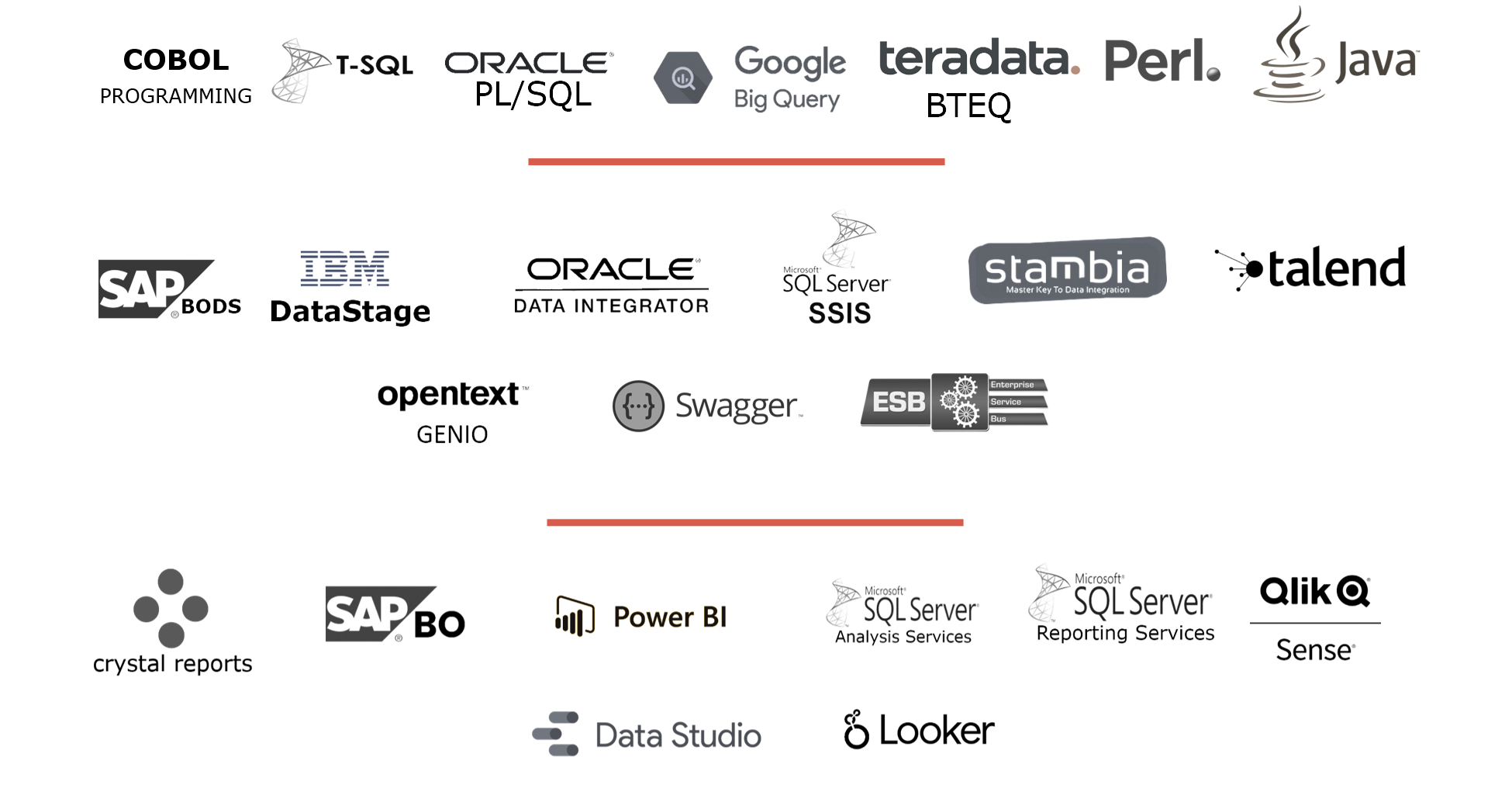

One of the major axes of IT transformation remains migration to the Cloud. Unfortunately, rewriting millions of lines of code or rebuilding dashboards in the target technology are major obstacles, especially when the existing assets are substantial.

- 1- Migrate the code

{openAudit} will “parse” T-SQL, PL/SQL, Teradata BTEQ, Cobol, Perl, and any other procedural language heavily present in legacy systems, and will break down all the complexity of the source code.

{openAudit} will deduce the overall flow and logic of the processing in order to reproduce it in the target technology.

- 2- Migrate dataviz technologies

{openAudit} will analyze in a granular way the logic of a dashboard, as well as the "template" of the source technology, to dynamically reconstruct it in the target technology and thus save teams 95% of the migration effort.

- Technologies addressed:

- Conclusion

Simplifying an Information System, i.e. reducing IT debt through detailed introspection of its processes, obviously has countless virtues: less maintenance, lower licensing costs, lower Cloud bills, reduced risks, etc.

For companies with largely “on-premises” Information Systems, it is also a way to optimize their Cloud migration and move towards far more efficient systems (scalability, sharing of business knowledge, etc.). Once in the Cloud, the challenge is to contain information inflation and therefore Cloud costs, which are becoming a thorny problem for many companies.

There is also a less visible benefit: the lasting reduction in the ecological footprint of an Information System thanks to its permanent rationalization.

www.ellipsys-lab.com

contact@ellipsys-lab.com
